Category: spin in science writing

Spin in reporting a case-control study

This post follows up on my previous post on the same topic: cannabis use and psychosis. I was inspired by a podcast presented by Matt, Chris, and Don from the Population Health Exchange of the Boston University School of Public Health. My focus here is on spin in science writing. About the study: this was a multicentre case-control study involving several countries. It appeared in The Lancet Psychiatry in 2019 under the title “The contribution of cannabis use to variation in the incidence of psychotic disorders across Europe (EU-GEI): a multicentre case-control study”. You can access the…

Spin in psychiatry and psychology research abstracts

This post looks at how misreporting (spin) occurs in psychiatry and psychology research, specifically in research abstracts. In 2019, Samuel Jellison and his team looked into this, and we will see what they found. They reviewed research papers published between January 2012 and December 2017. Of the 116 papers they located, they found distorted reporting in the abstracts of 65 (56 per cent). Interestingly, they found no significant association with the funding source; in other words, spin was no more or less common whether or not the funding came from a for-profit industry source. How…
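As a quick check of the percentage quoted above, here is a small Python sketch that derives it from the two counts given in the excerpt (65 of 116 papers). The variable names are my own, chosen only for illustration.

```python
# Proportion of reviewed abstracts judged to contain spin,
# using the counts quoted in the excerpt (65 out of 116 papers).
papers_reviewed = 116
papers_with_spin = 65

proportion = papers_with_spin / papers_reviewed
percent = round(100 * proportion)  # rounded to the nearest whole per cent

print(f"{papers_with_spin}/{papers_reviewed} = {proportion:.3f} (about {percent}%)")
```

Running it prints `65/116 = 0.560 (about 56%)`, which matches the 56 per cent reported.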

Not reporting negative results is a form of spin

Most often, we read only the abstracts of research papers, trusting that the researchers report their findings accurately. This does not always happen, particularly when the primary outcomes turn out to be non-significant: instead, we find only the significant secondary outcomes reported. This is also a type of spin and academic mischief. Sometimes, significant adverse effects of the study intervention are not reported either. These types of spin occur in randomized trials. We know that randomized trials carry the highest level of evidence in research. In this study design, we compare a new treatment…

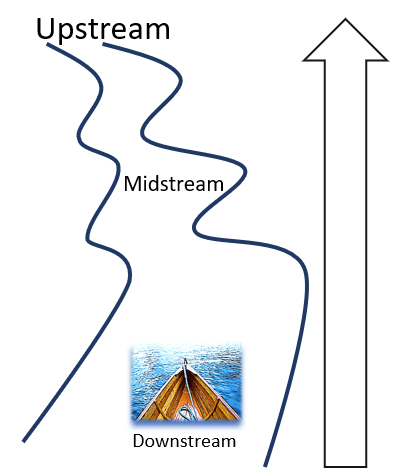

Spin in writing recommendations from observational study results

In my previous post, I dealt with how spin occurs in reporting observational studies: the use of causal language in reporting their findings. This post adds another form of spin that occurs frequently in reporting the results of observational studies: making recommendations based on results from observational study designs. Observational studies are very useful in science; however, we cannot make clinical recommendations for practice based only on findings from observational studies. This is because these study designs only allow us to determine prevalence and incidence, or to demonstrate associations or correlations. Indeed, they do not allow us to infer causation. However,…

Distortion in reporting observational studies

The distortion of research findings happens; it is a big problem, and it is scientific mischief, as Robert H Fletcher and Bert Black reported in the journal Medicine and Law in 2007 [1]. Sometimes researchers do this consciously, but it can also happen unconsciously. Spin in scientific reporting can have profound negative consequences, not only for our health but also in the legal sector. Spin can occur when we interpret data, because interpretation is subjective. Tasnim Elmamoun’s post on recognising spin in the scientific literature, published on the PLOS SciComm blog, takes this discussion deeper…